Each week we go beyond the headlines and explore what AI will mean – for people, for businesses, the future of work, learning and culture, and more.

While two of this week’s stories focus on healthcare, forward-thinking readers will see they are about far bigger shifts that will affect everyone.

Use these examples to ask better questions about “where next?”, and to understand why putting people’s needs at the heart of your AI strategy will be the key to success.

This week, learn from:

NVIDIA and Hippocratic.ai: $9/hour AI healthcare avatar agents

Parallel Live: looking beyond dystopian AI use cases

Google’s HeAR: diagnosing disease from coughs

👩🏽⚕️ NVIDIA and Hippocratic.ai launch $9/hour AI healthcare avatar agents

NVIDIA’s GTC used to be ‘just’ a developer conference. Now it’s a window into our AI future. One of last week’s many launches was a set of generative AI healthcare agents, developed with Hippocratic.ai: phone- and video-based agents that can assist patients with risk assessments, pre-op check-ins, post-discharge check-ups and chronic illness management.

Your reaction to these agents was something of a Rorschach test, highlighting which of the two AI camps you fall into. Are you team “hooray…AI will liberate us!” or team “shiiiiit….AI will destroy jobs, everything we value, and possibly humanity itself”?

My view? This is a perfect example of how AI has the potential to unlock a future of work that is “Less, But Better”.¹

There are many obvious gaps that these agents could fill:

The WHO estimates there will be a shortfall of 10 million health workers by the end of the decade, and clinician burnout is a huge issue, with around 40% of clinicians emotionally exhausted or burnt out.

The AI agents can now reportedly outperform human professionals on a variety of objective and subjective benchmarks.

They also unlock new possibilities: they are available 24/7, can speak multiple languages, and never rush or slip into overly technical language.

Yet for every gap that an AI agent can fill (as well as, or even better than, humans), there is still a big one that it won’t: human-to-human connection.

Successful healthcare is as much about patients’ emotional experience as it is about their physical outcomes. If you’re diagnosed with cancer, lose someone close to you, or receive any other terrible news, you want someone to look into your eyes, hold your hand and tell you everything is going to be OK. Or, if it isn’t, to at least share your distress and fear.

After Google’s AMIE medical chatbot was released, I wrote in VisuAIse Futures: Healthcare:

[while] the quality and empathy of its conversations were rated as significantly better than those with human doctors … we don’t believe that patients will rush towards fully automated healthcare. Unlike Google’s actors, real patients aren’t in a double-blind study. People want the accountability and relatability of human clinicians. Mental healthtech app Koko tested using AI to write supportive messages for its users. The data showed people preferred them – right up until they found out they were written by an AI. As its CEO said, “simulated empathy feels weird, empty.”

Whether you work in healthcare or not, it’s useful to remember Rory Sutherland’s ‘doorman fallacy’ here:

If you view a doorman as just opening the doors, then automation will be very attractive. Yet doormen do much more than this – they greet guests, carry bags, give tips and local insights, and more. They elevate the experience and make people feel secure.

Some leaders will see AI agents as a way to reduce their costs. And there will undoubtedly be a place for low-cost, fully automated providers (most probably serving patients or customers who are poorly served today, if at all).

Most leaders would be better off asking a different question: how can we deploy these tools to take the burden of handling logistics off our people, freeing them to focus on improving our patients’ experience? And, as I explain in The Future Normal of Work: “Less, But Better”, this approach could also increase wages rather than destroy jobs.

Two big questions for you to consider here:

💡 How might AI agents handle functional tasks so that people can humanise emotional moments?

💡 What if we could use AI agents to employ fewer human workers, but at higher wages?

Henry will be delivering a keynote on “Designing A People-First AI Strategy for Hospitality” at the Future Hospitality Summit in Riyadh on 30 April.

Interested in having a similar session for your sector or team?

🤳 Parallel Live and looking beyond dystopian AI use cases

I was left open-mouthed at this post on X (Twitter?!) recently about how sleazy wannabe influencers can now use AI to fake viewers, comments and even tips. Yeah, nice. 🤮

But let's explore two more optimistic thoughts about what this means for the future normal:

Digital trust is trending to zero. Last week I posted a video of an AI avatar that could take job interviews for you, and it provoked a strong reaction. But I just can’t see these fakes becoming a significant part of the future normal.

My kids will grow up assuming that seeing someone ‘winning’ online means nothing. Actually, forget the kids – by next year, you won’t trust anything you see online either.

We’re still so early when it comes to imagining all the potential use cases for AI. I would never have thought of automating a job interview, or of this sleazy fake-influencer idea. But for every unethical and dystopian viral social media video, there will be tens, if not hundreds, of well-meaning, smart, amazing people working on AI tools that bring us together, help us learn faster, save us time, improve sustainability, and more. Activision’s anti-bullying chat monitoring AI tool is a personal favourite here.

As always, when you see these provocative demos, don’t just get mad. Instead, ask yourself:

💡 What will this do to people’s expectations? (This tells you where things are headed).

💡 What would a positive version of this look like? (This helps you do work that matters).

😷 Google HeAR: diagnosing disease from coughs

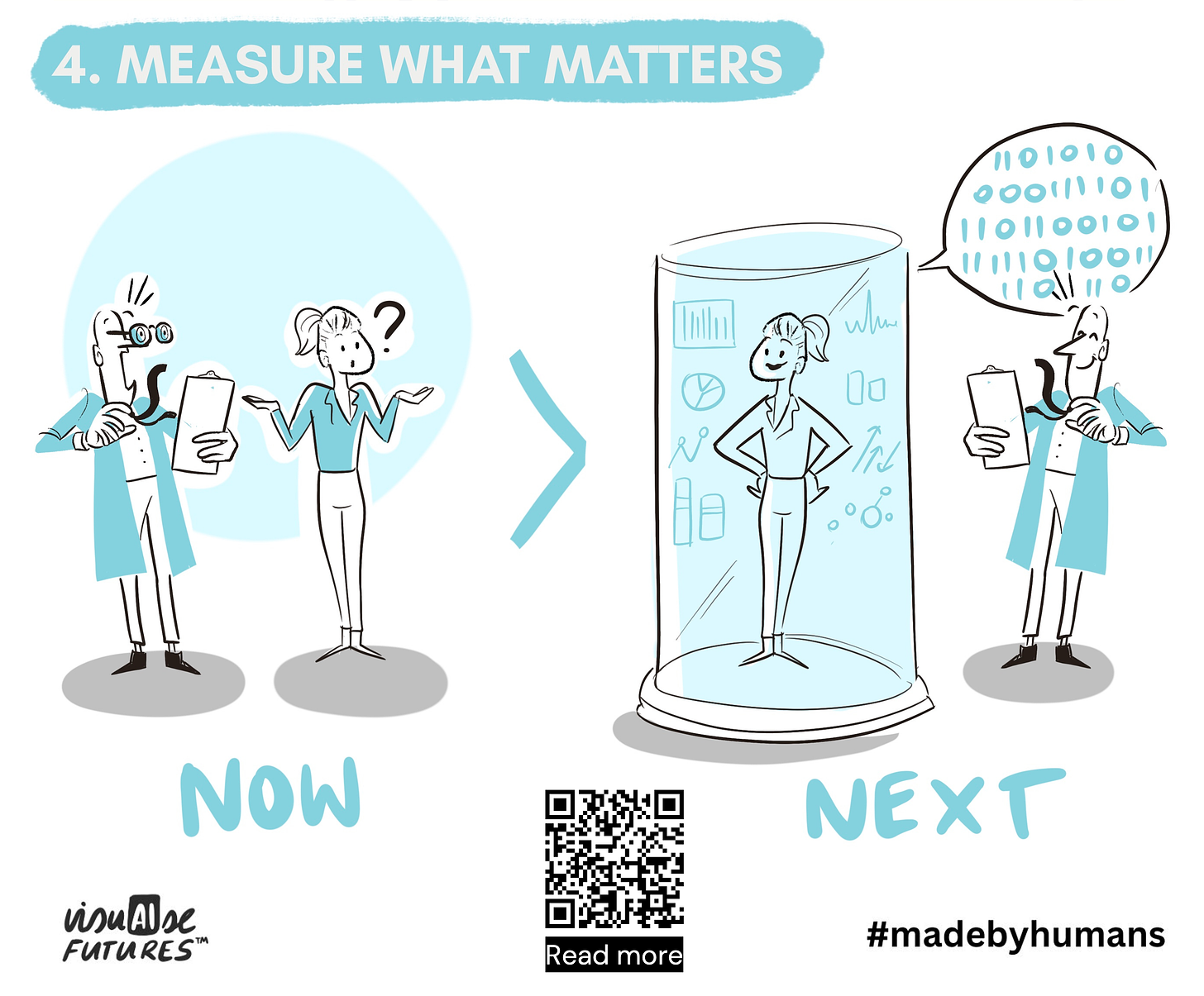

In the full VisuAIse Futures: Healthcare report, we wrote about the need to Measure What Matters, saying:

We’ll use AI to make the invisible, visible – detecting signals and patterns in countless streams of health-related data that no human doctor would be able to, allowing for earlier and more effective interventions. Are you ready for the pre-health revolution?

Barely a month after we published this, Google launched HeAR (Health Acoustic Representations), a tool that can help detect and monitor health conditions by evaluating sounds such as coughing and breathing.

The most interesting detail in this story, however, is the data Google’s researchers used: more than 300 million short sound clips of coughing, breathing, throat clearing and other human sounds, extracted from publicly available YouTube videos.

They then trained the tool to predict the missing portions of sound clips. As Nature observes, this is “similar to how the large language model that underlies chatbot ChatGPT was taught to predict the next word in a sentence after being trained on myriad examples of human text.” Built on this foundation model, the tool appears to be able to predict health conditions such as COVID-19 and tuberculosis.

The other big advantage? Real-world data is far more diverse than traditional medical training data, which, while high quality, is often very limited in scale and can result in biases and blind spots.

The big takeaway, even for those not in healthcare? AI is unlocking entirely new datasets, and helping turn them into meaningful insights.

💡 Gather your team and ask yourselves: what unconventional data sources might we now be able to tap into to get more diverse and robust insights? Need some prompting? Just ask my Trend Analyst GPT!

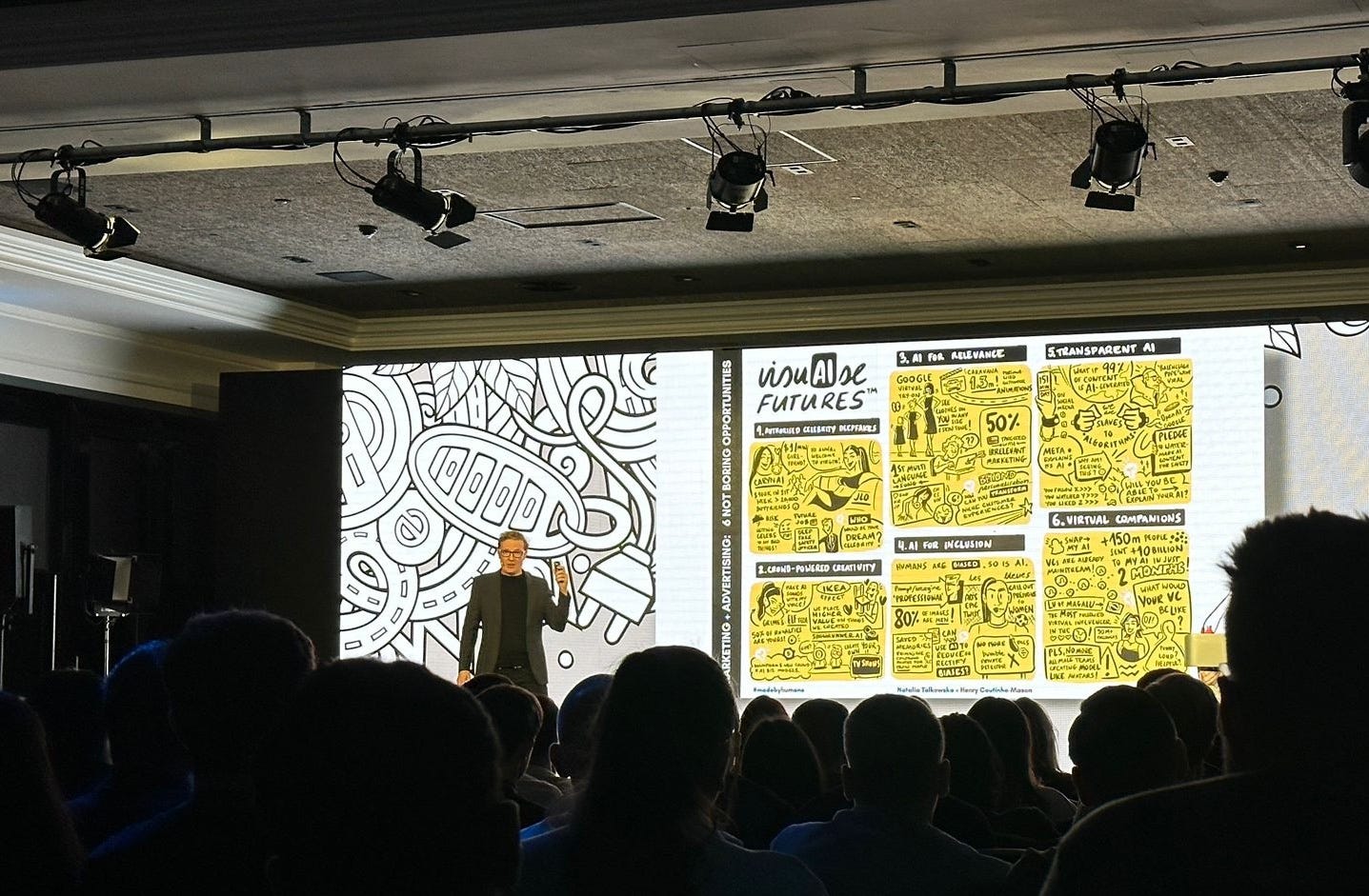

About VisuAIse Futures

VisuAIse Futures is a graphic collaboration between 👩🎨 Natalia Talkowska and 🕵🏻♂️ Henry Coutinho-Mason – two curious humans with 20+ years of collective experience helping large organisations navigate change.

We love helping companies increase the reach and impact of their thinking, so if you’d like us to run a workshop at your next AI event or meeting, and to co-create a custom graphic for your organisation, let’s chat!

“Inspiring, energetic, and entertaining. Henry's session for 2,000 senior executives from a Fortune 100 healthcare company received the highest rating from the attendees.” Brent Turner, SVP Head of Strategy, Cramer Events

¹ I say “potential” because none of this is guaranteed. There’s absolutely a dystopian future where less isn’t better – less privacy, less human interaction, and less respect and dignity. We have a choice as to how we deploy these technologies.